Speaking engagements

Tweet about InVision panel, 2019

Screenshot from WiUX Talk, 2022

Ethics-by-Design & AI Ethics

Implementing Generative AI for Equity, Ethics, and Inclusion, Dreamforce, San Francisco - September 2023

A Great Resignation Antidote: Human-Centric Customer Service, Connections Conference, Chicago - June 2022

Women in UX, University of Washington - May 2022

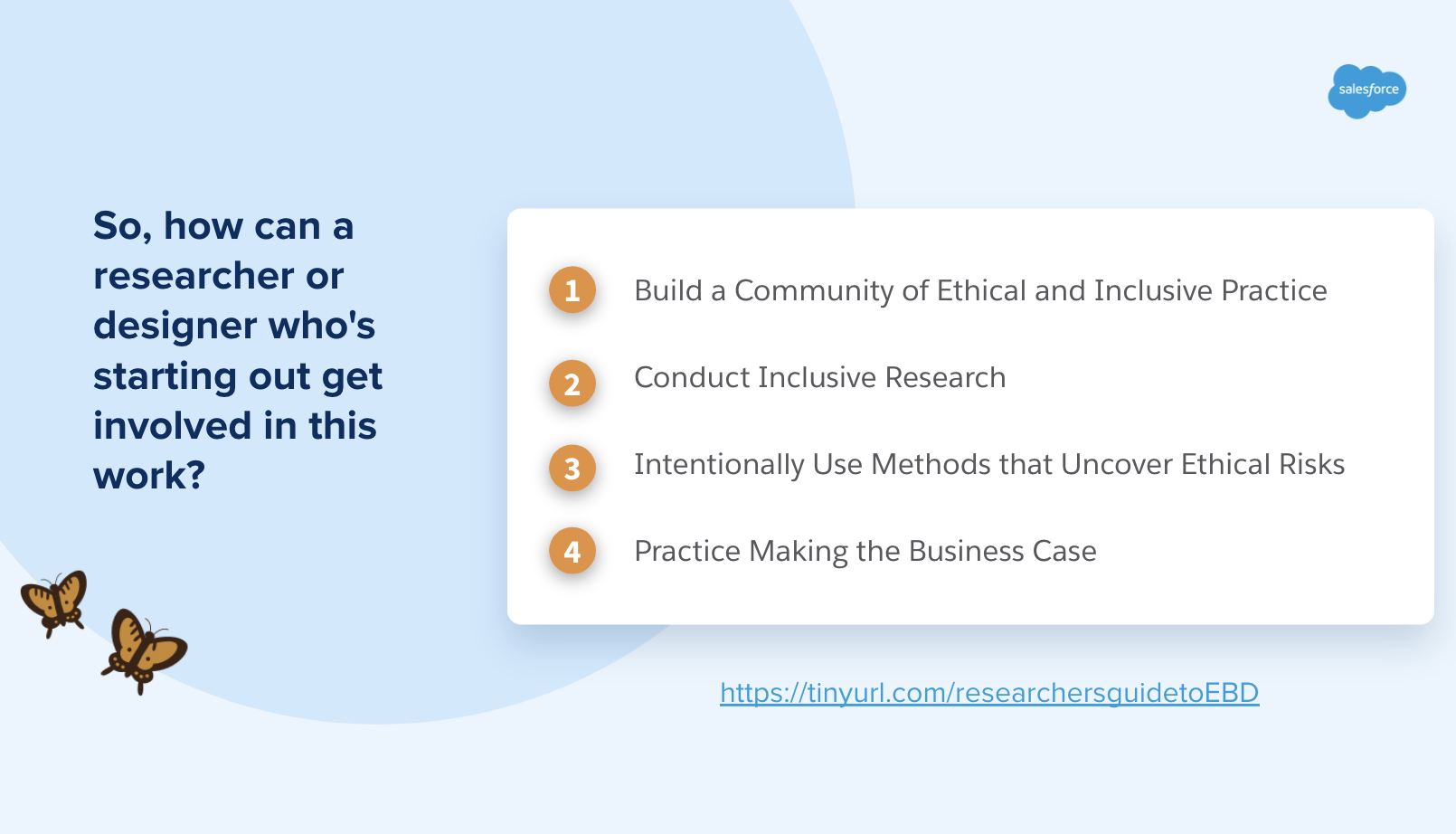

Research for Ethical & Inclusive Products - How to Get Started

InVision DesignPlus Panel - April 2019

Conversation with Leah Buley (VP Experience Research @ InVision), Sarah Alpern (VP Design @ LinkedIn), and Benjamin Earl-Evans (Inclusive Design Lead @ Airbnb).

AI & Responsible Design: Perspectives from Practitioners (UC Berkeley Algorithmic Fairness and Opacity Working Group) - November 2019

Discussion on how designers, UX researchers, and other experts in industry are thinking about ethical and human-centered design for algorithmic systems and products, and how values such as fairness, privacy, transparency, and user engagement are considered in the design process.

w/ Henriette Cramer (Principal Research Scientist and PM @ Spotify), Josh Lovejoy (Principal Design Manager @ Microsoft), and Dan Perkel (Director @ IDEO).

Simons Institute for the Theory of Computing at UC Berkeley - July 2019

Ethics panel during the summer workshop series for machine learning researchers on “Emerging Challenges in Deep Learning” w/ Sharad Goel (Stanford University), Deirdre Mulligan (UC Berkeley), and Alice Xiang (Partnership on AI).

Design//Work: Designing for Artificial Intelligence (American Institute of Graphic Arts) - November 2019

w/ Kristian Simsarian (CCA), Sures Kumar (Designer, People+AI @ Google), Kandice Cota (Design @ Cota Innovation)

Cover slide for Global Accessibility Awareness Day Summit talk

Moderating a panel of fellow Ethical Use Advisory Council members, 2019

Salesforce Internal

Customer & User Research for Inclusive Design at Salesforce - Global Accessibility Awareness Day 2022

What neurodivergent users can teach us about designing Salesforce Slack apps that work better for everyone - Spring 2022

Moderator, Meet the Ethical & Humane Use Advisory Council @ Salesforce - December 2019

On stage at Dreamforce

Improving the User Experience

Get Comfortable With Generative AI for Salesforce Admins (Dreamforce 2023)

Overview of generative AI, best practices for centering the human-in-the-loop, and research-based tips for assessing risk & managing change.

Design for the Productivity Your Users Need (Dreamforce 2019)

Where should you focus your energy to drive productivity for your users? How do your users define productivity, and what makes them feel productive? Drawing on our work as user researchers, we share what we've learned about how end users define productivity for themselves, and how you can move beyond efficiency metrics to drive real productivity for your users.

w/ Azalea Irani (User Researcher @ Salesforce)

Design Lightning Apps the User Friendly Way (TrailheaDX 2019)

w/ Azalea Irani (User Researcher @ Salesforce)

Persona-Driven Design of Your Salesforce Org (Dreamforce 2018)

w/ Rebecca Sherrill (Senior Director, User Research @ Salesforce)

Configure Salesforce Lightning Pages That Work For Your Users (Dreamforce 2018)

w/ Eric Shih (Director, Product Management @ Salesforce)


Academic

Presenter, All Things in Moderation Conference @ UCLA - December 2017

Presented THE MODERATION MACHINE, a quantitative study and tool that enables technologists to reflect on their own values alongside user perspectives when creating anti-harassment policies and building remediation steps into their platforms.